Les Bell and Associates Pty Ltd

Study for the CISSP in Your Spare Time!

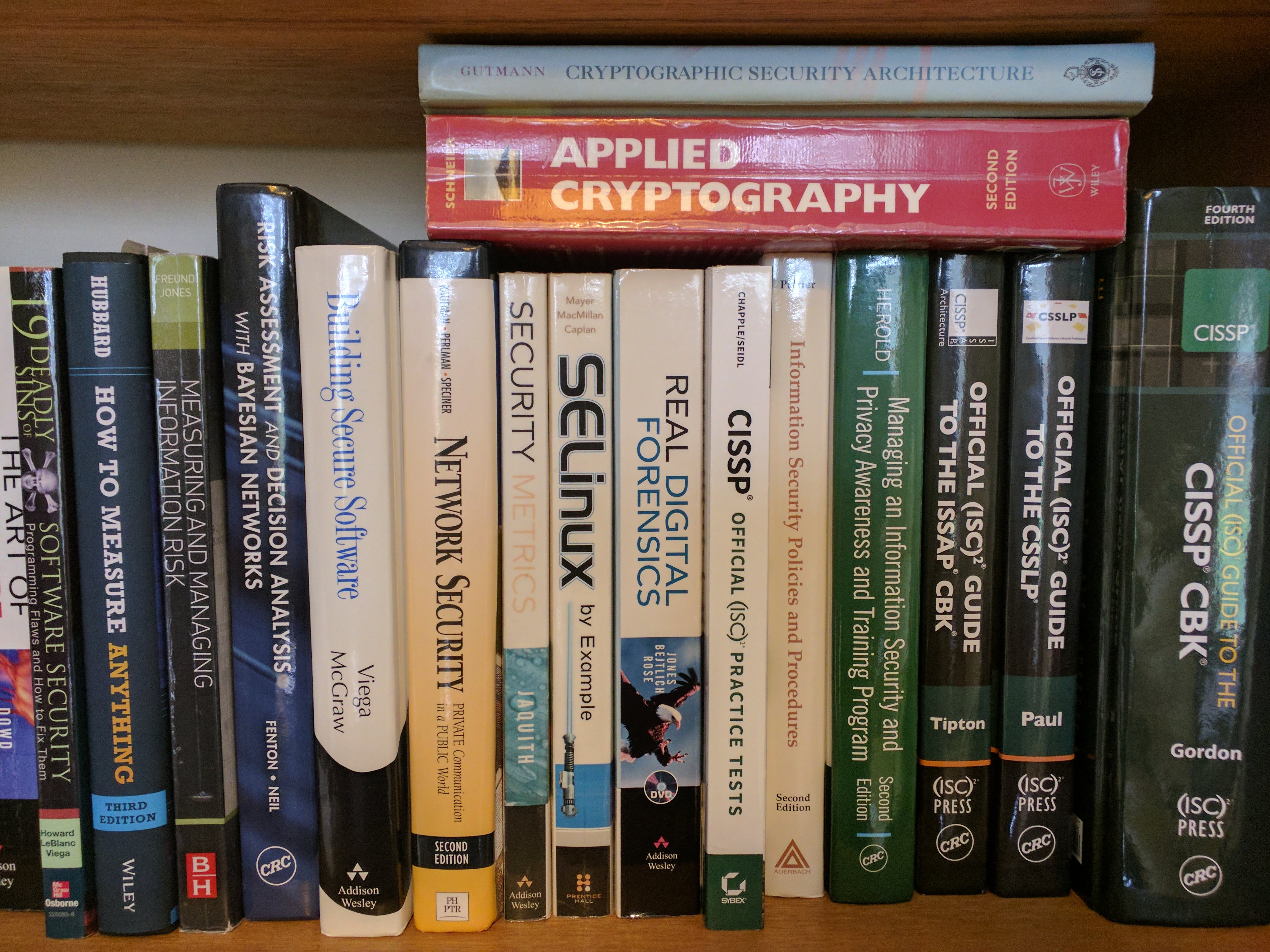

CISSP Fast Track Course

Available courses

This is the support area for the SE221 CISSP Fast Track Review class, organised for delivery as 12 weekly sessions, with the first tutorial session on Wednesday 19th February at 6:00 pm. Each week, students will have some readings to complete and videos to watch before we assemble for the tutorial session to review and tie everything together.

- Lecturer: Les Bell

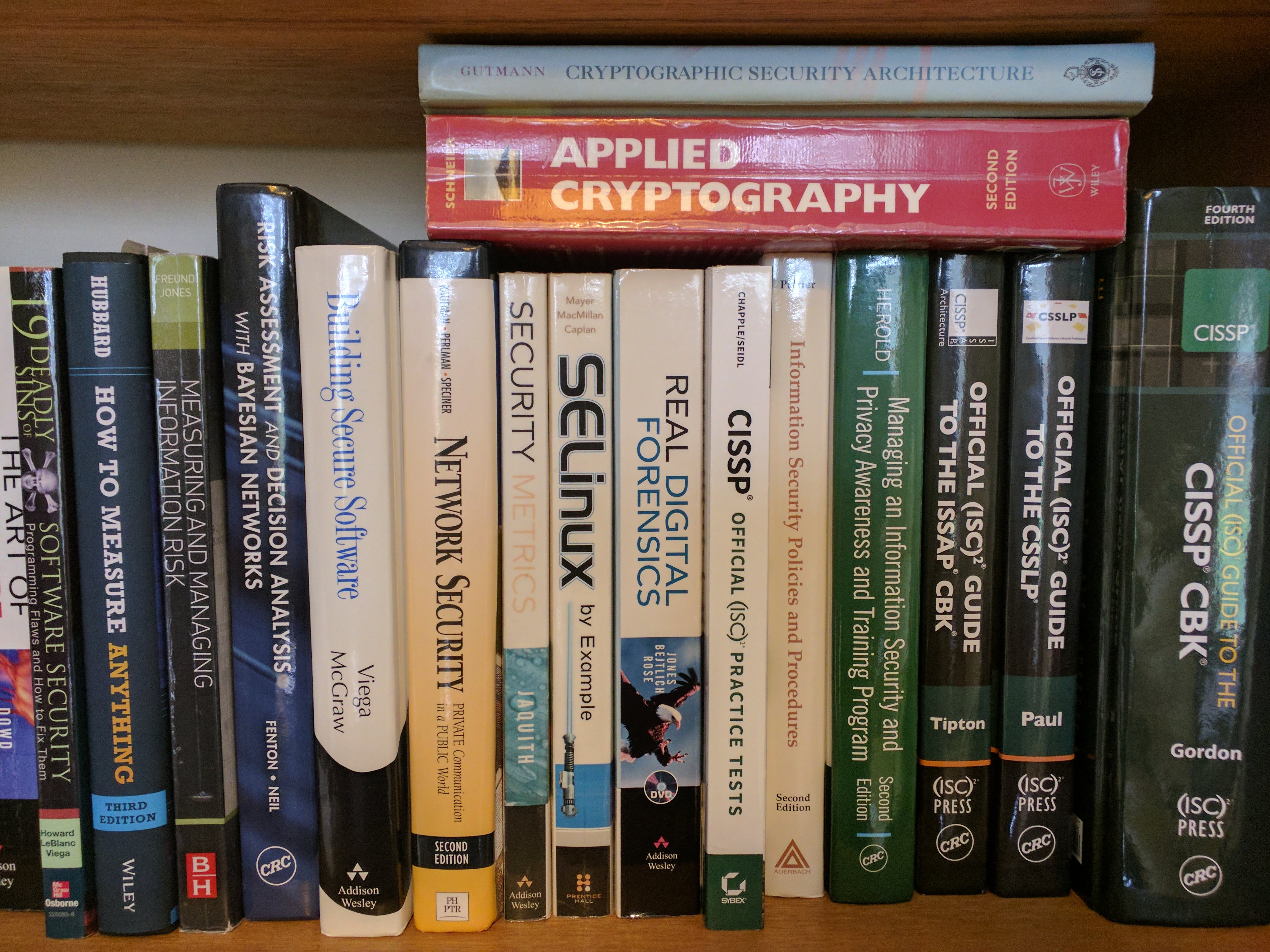

This is the support area for our CISSP Fast Track Review course. It is continually updated and revised with new content, and is organised in accordance with the latest update to the CISSP Common Body of Knowledge.

- Lecturer: Les Bell

This is not really a course - rather, it is a general area for discussion between attendees in a number of courses, together with certain other non-attendees (e.g. employers and recruiters).

- Moderator: Les Bell

This course area provides detailed information to accompany a short talk we can provide for associations, clubs and groups interested in cybersecurity for small business. (Guest access or higher is required.)

- Speaker: Les Bell

This is the support area for our short private course, SE107. Here you will find additional resources, such as a continually-updated version of the course slides, discussion forums, self-assessment quizzes and a wiki containing supplementary reference material.

This is the support area for the SE221 CISSP Fast Track Review class, organised for delivery as 12 weekly sessions. Each week, students will have some readings to complete and videos to watch before we assemble for a tutorial session to review and tie everything together.

- Lecturer: Les Bell

This is the support area for the SE221 CISSP Fast Track Review class, organised for delivery as 12 weekly sessions. Each week, students will have some readings to complete and videos to watch before we assemble for a tutorial session to review and tie everything together.

- Teacher: Les Bell

This half-day workshop is intended to meet the needs of board members, senior managers and administrators in small and medium enterprises. Upon completion, attendees will understand the basic principles of cybersecurity, cybersecurity governance, risk management, security policies, cyber resilience, security education, training and awareness, and cybersecurity operations, including incident response.

- Lecturer: Les Bell

This is not a course - just a home for resources such as the wiki and forums across all our business-level cybersecurity courses. Self-enrolment is not possible; students in the relevant courses are automatically enrolled.

- Moderator: Les Bell